1. What is Google Webmaster Tools, and what are the benefits of using it?

Ans. On 20 May 2015, Google renamed Google Webmaster Tools to Google Search Console.

Google Search Console is a free service offered by Google that helps you monitor and maintain your site's presence in Google Search results.

Google Search Console helps business owners, SEO experts, site administrators, and web developers check crawl errors, crawl stats, backlinks, and malware issues with a click of a button. A webmaster can add up to 1,000 sites to Google Search Console.

Benefits of using Google Search Console

- Search Queries

- Links

- Crawl Errors

- Keywords

- Sitemaps

- Robots.txt Tester

- Google Index

- Remove URLs

How to Set Up Google Webmaster Tools

To set up Webmaster Tools, visit http://www.google.com/webmasters/tools and sign in with your Google account. Click ‘Add a Property’, enter your site’s URL, and then verify that you own the site.

2. How can I see what pages are indexed in Google?

Ans. There are two ways to see if the webpages of any specific website are indexed by Google.

1) You can check the index status of a website through Google Webmaster Tools. After adding the website on the dashboard and verifying ownership, click the “Index Status” tab to see the number of pages Google has indexed.

2) You can also search Google manually by typing site:domainname.com into the search bar; the results page shows an approximate count of the indexed pages.

3. Search Analytics

Ans. The Search Analytics report shows how often your site appears in Google search results. You can filter and group the data by categories such as search query, date, or device, and use the results to improve your site’s search performance.

Choosing which metrics to display:

- Clicks

- Impressions

- CTR

- Position
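CTR (click-through rate) is clicks divided by impressions. A minimal sketch, assuming you have exported rows of query, clicks, and impressions from the report; the `Row` type, the `ctr` function, and the sample numbers are hypothetical illustrations, not Search Console's API.

```python
from dataclasses import dataclass


@dataclass
class Row:
    """One hypothetical row exported from the Search Analytics report."""
    query: str
    clicks: int
    impressions: int


def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction: clicks / impressions (0.0 if no impressions)."""
    return clicks / impressions if impressions else 0.0


# Hypothetical sample data for illustration only.
rows = [
    Row("seo interview questions", 40, 1000),
    Row("google search console", 5, 250),
]

for row in rows:
    # Show CTR as a percentage, the way the report displays it.
    print(f"{row.query}: {ctr(row.clicks, row.impressions):.1%}")
```

With these sample rows, the first query has a CTR of 4.0% and the second 2.0%.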

4. Search Appearance (Structured Data, Rich Cards, Data Highlighter, HTML Improvements, Accelerated Mobile Pages)

Ans. Accelerated Mobile Pages (AMP) is an open-source initiative for building lightweight web pages that load quickly and look good on mobile devices, even over slow networks.

5. Google Index (Index Status, Blocked Resources, Remove URLs)

Ans. Check the Google Index Status of any specific website through Google Webmaster tools.

6. Crawl (Crawl Errors: site errors, URL errors; Crawl Stats; Fetch as Google: Fetch, Fetch and Render; robots.txt Tester; Sitemaps; URL Parameters)

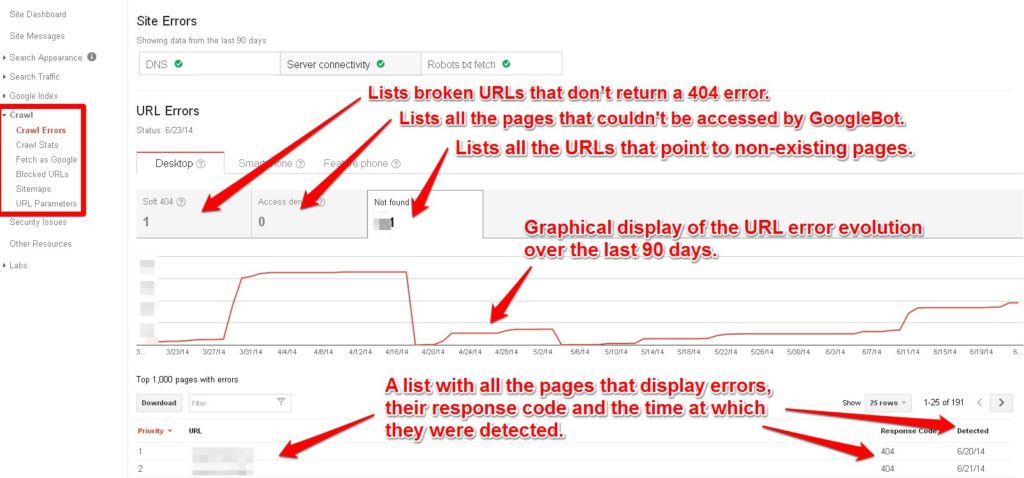

Ans. The Crawl Errors report provides details about the site URLs that Google could not crawl successfully or that returned an HTTP error code.

Site errors: This section of the report shows the main issues for the past 90 days that prevented Googlebot from accessing your entire site.

URL errors: This section lists specific errors Google encountered when trying to crawl specific desktop or phone pages. Each main section of the URL Errors report corresponds to a different crawling mechanism Google uses to access your pages, and the errors listed are specific to those kinds of pages, so you can find and fix them.
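URL errors are tied to the HTTP status code a page returns. As a rough illustration, the sketch below groups status codes into categories similar to the ones the report uses, such as "Server error", "Not found", and "Access denied". The mapping, the extra labels ("Redirect", "Other client error"), and the sample crawl results are assumptions for illustration, not Google's exact classification logic; for example, a soft 404 cannot be detected from the status code alone.

```python
def classify_status(status: int) -> str:
    """Map an HTTP status code to a rough crawl-error category (illustrative only)."""
    if 200 <= status < 300:
        return "OK"
    if 300 <= status < 400:
        return "Redirect"
    if status in (401, 403):
        return "Access denied"
    if status in (404, 410):
        return "Not found"
    if 400 <= status < 500:
        return "Other client error"
    if 500 <= status < 600:
        return "Server error"
    return "Unknown"


# Hypothetical crawl results: path -> HTTP status returned.
crawl_results = {"/": 200, "/old-page": 404, "/admin/": 403, "/search": 503}
for path, status in crawl_results.items():
    print(path, status, classify_status(status))
```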

Fetch as Google(Fetch, fetch and render):

The Fetch as Google tool enables you to test how Google crawls or renders a URL on your site. You can use it to see whether Googlebot can access a page on your site, how it renders the page, and whether any page resources (such as images or scripts) are blocked to Googlebot.


This tool can be effectively used for the following purposes:

- Troubleshooting webpages to improve SEO performance.

- Submitting pages for indexing whenever the content is changed significantly.

- Finding out the pages affected by any malware attack.

You can fetch your homepage by leaving the box blank, or fetch any other directory or page on your site by entering its path. This helps you see whether there are any connectivity or security issues, and it’s quick: it takes less than 30 seconds and returns your page’s code, which is what Googlebot sees.
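Fetch as Google runs on Google's side, so you cannot reproduce it exactly. A rough local approximation, under stated assumptions: request the page with a Googlebot user-agent string and look at the raw HTML that comes back. This runs from your own machine and IP address, does not render JavaScript, and some servers verify real Googlebot by reverse DNS, so treat the result as a sanity check only. The function names `build_googlebot_request` and `fetch_as_googlebot` are hypothetical.

```python
from __future__ import annotations

import urllib.request

# One of the user-agent strings Googlebot has published; Googlebot also uses other variants.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_googlebot_request(url: str) -> urllib.request.Request:
    """Build a GET request that identifies itself with a Googlebot user-agent string."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url: str, timeout: float = 30.0) -> tuple[int, str]:
    """Download a page and return (HTTP status, raw HTML).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses and
    urllib.error.URLError for connection problems.
    """
    with urllib.request.urlopen(build_googlebot_request(url), timeout=timeout) as resp:
        # Decode using the charset the server declares, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.status, resp.read().decode(charset, errors="replace")
```

For example, fetch_as_googlebot("https://www.example.com/") returns the status code and the HTML source that a crawler would receive from that request.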

When you use the “Fetch and Render” option, Google also tells you about elements that its crawler can’t see or is blocked from seeing. Googlebot runs through the page (or the set of pages you designate by entering a directory) and all the links in it, including links in the page’s code rather than its visible content. These links can point to images, separate CSS files, JavaScript, or other code.
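To see why some resources show up as blocked, you can approximate one part of Fetch and Render locally. A minimal sketch, assuming you already have the page HTML and the site's robots.txt lines: it collects the images, scripts, and stylesheets the page references and checks each one with Python's standard `urllib.robotparser`. It does not render the page or discover resources loaded by JavaScript, it checks only one robots.txt (resources on other hosts need their own), and `robotparser` does not follow Google's exact matching rules (wildcards, longest-match precedence). The page, HTML, and robots.txt below are hypothetical.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collect absolute URLs of images, scripts and stylesheets referenced by a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.resources: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        ref = None
        if tag in ("img", "script"):
            ref = attrs.get("src")
        elif tag == "link" and attrs.get("rel") == "stylesheet":
            ref = attrs.get("href")
        if ref:
            # Resolve relative references against the page URL.
            self.resources.append(urljoin(self.base_url, ref))


def blocked_resources(page_url: str, html: str, robots_lines: list[str],
                      user_agent: str = "Googlebot") -> list[str]:
    """Return the page resources that robots.txt disallows for user_agent."""
    collector = ResourceCollector(page_url)
    collector.feed(html)
    robots = RobotFileParser()
    robots.parse(robots_lines)
    return [url for url in collector.resources if not robots.can_fetch(user_agent, url)]


# Hypothetical page and robots.txt used only for illustration.
page = "https://www.example.com/blog/post.html"
html = ('<link rel="stylesheet" href="style.css">'
        '<img src="/images/logo.png">'
        '<script src="/assets/js/app.js"></script>')
robots_txt = ["User-agent: *", "Disallow: /assets/js/"]

print(blocked_resources(page, html, robots_txt))
```

Here only the JavaScript file falls under the disallowed /assets/js/ path, so it is the one resource reported as blocked.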

See here: https://www.semrush.com/blog/googles-fetch-and-render-why-its-important/

7. What are search queries?

Ans. A search query, or search term, is the actual word or string of words that a search engine user types into the search box to pull up a list of results. Search queries come in different flavors, and it is commonly accepted that there are three types:

- Navigational search queries

- Informational search queries

- Transactional search queries

8. Structured Data Testing Tool

Ans. A Google tool for validating and testing the structured data on a website: https://search.google.com/structured-data/testing-tool
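To have something to test, you need markup on the page. As an illustration, here is a minimal sketch that produces a JSON-LD block (one of the structured data formats Google supports) describing a schema.org Organization; you could paste the output into the testing tool. The organization details and the `json_ld_script` helper are placeholders, not taken from any real site.

```python
import json


def json_ld_script(data: dict) -> str:
    """Wrap a schema.org object in a <script type="application/ld+json"> tag."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Hypothetical example values; replace them with your own organization's details.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Company",
    "url": "https://www.example.com/",
    "logo": "https://www.example.com/logo.png",
}

print(json_ld_script(organization))
```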

9. How To Use Rich Snippets, Structured Markup For High Powered SEO?

Ans. See here: http://searchengineland.com/how-to-use-rich-snippets-structured-markup-for-high-powered-seo-99081

10. How do you add a sitemap?

Ans. Generate an XML sitemap of the website, go to the Crawl section, click Sitemaps, then click Add/Test Sitemap and either submit or test it.
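If you don't use a plugin or an online generator, the sitemap file itself is simple XML. A minimal sketch using Python's standard library, assuming you already have a plain list of page URLs; it emits only the required `<urlset>`, `<url>`, and `<loc>` elements of the sitemaps.org protocol (optional tags such as `<lastmod>` are left out). The function name `build_sitemap` and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & automatically.
        ET.SubElement(url_el, "loc").text = url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap(["https://www.example.com/", "https://www.example.com/about"]))
```

Save the output as a file on your site (for example at the root) and submit its URL in the Sitemaps section.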

11. How do you generate sitemaps for a WordPress site?

Ans. Use Yoast’s WordPress SEO plugin to generate your sitemaps.

12. How do you create a sitemap if the website has more than 50,000 URLs?

Ans. All formats limit a single sitemap to 50MB (uncompressed) and 50,000 URLs. If you have a larger file or more URLs, you have to break your list into multiple sitemaps, for example sitemap1, sitemap2, and so on. You can optionally create a sitemap index file (a file that points to a list of sitemaps) and submit that single index file to Google. You can submit multiple sitemaps and/or sitemap index files to Google.
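Splitting the list is plain chunking. A minimal sketch, assuming the URLs are already in a Python list: it cuts the list into consecutive chunks of at most 50,000 URLs and offers a size check against the 50MB uncompressed limit (50MB is 52,428,800 bytes in the sitemaps.org protocol). Chunking by count alone does not guarantee the size limit, so a chunk with very long URLs may need to be split further. The `sitemapN.xml` naming scheme and all function names are hypothetical.

```python
from __future__ import annotations

MAX_URLS_PER_SITEMAP = 50_000
MAX_SITEMAP_BYTES = 50 * 1024 * 1024  # 50MB uncompressed (52,428,800 bytes)


def chunk_urls(urls: list[str], max_urls: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split a URL list into consecutive chunks of at most max_urls URLs."""
    return [urls[i:i + max_urls] for i in range(0, len(urls), max_urls)]


def fits_size_limit(sitemap_xml: str) -> bool:
    """True if the uncompressed sitemap text is within the 50MB limit."""
    return len(sitemap_xml.encode("utf-8")) <= MAX_SITEMAP_BYTES


def sitemap_names(count: int) -> list[str]:
    """Hypothetical naming scheme: sitemap1.xml, sitemap2.xml, ..."""
    return [f"sitemap{n}.xml" for n in range(1, count + 1)]


# Hypothetical site with 120,001 pages.
urls = [f"https://www.example.com/page{i}" for i in range(120_001)]
chunks = chunk_urls(urls)
for name, chunk in zip(sitemap_names(len(chunks)), chunks):
    print(name, len(chunk))
```

For 120,001 URLs this yields three sitemaps holding 50,000, 50,000, and 20,001 URLs.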

If you have many sitemaps, you can use a sitemaps index file as a way to submit them at once. The XML format of a sitemap index file is very similar to the XML format of a sitemap file. The sitemap index file uses the following XML tags:

- sitemapindex - the parent tag that surrounds the file.

- sitemap - the parent tag for each sitemap listed in the file (a child of sitemapindex)

- loc - the location of the sitemap (a child of sitemap)

- lastmod - the last modified date of the sitemap (optional)

If your sitemaps are gzip-compressed, list the .gz file names in the index so that the crawler reads the compressed files.
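The tags listed above map directly onto code. A minimal sketch with Python's standard library, assuming you already know the URL of each sitemap file; it writes `<sitemapindex>`, one `<sitemap>` per file with its `<loc>`, and `<lastmod>` only when a date is given, since that tag is optional. The function name, file URLs, and date are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(entries: list[tuple[str, str | None]]) -> str:
    """Build a sitemap index from (loc, lastmod) pairs; lastmod may be None."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = loc
        if lastmod:
            # Optional: date the sitemap was last modified (W3C date format).
            ET.SubElement(sitemap, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(index, encoding="unicode")


# Hypothetical compressed sitemaps, listed by their .gz names.
print(build_sitemap_index([
    ("https://www.example.com/sitemap1.xml.gz", "2018-01-15"),
    ("https://www.example.com/sitemap2.xml.gz", None),
]))
```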

https://www.xml-sitemaps.com/ : online sitemap generator (maximum 500 pages)

13. How do you add a robots.txt file?

Ans. Generate a robots.txt file and upload it to the root of your site, then go to the Crawl section, click robots.txt Tester, and click Test.

Google currently enforces a robots.txt size limit of 500 kilobytes (KB); content beyond that limit is ignored.
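You can run a rough version of both checks locally before uploading. A minimal sketch, assuming the robots.txt content is in a string: it compares the file size with the limit (treated here as 500 × 1024 bytes, which is an assumption about how KB is counted) and uses Python's standard `urllib.robotparser` to test whether given URLs are allowed for Googlebot. `robotparser` does not implement Google's wildcard or longest-match rules, so the robots.txt Tester remains the authoritative check. The function name and sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumption: treat the 500 KB limit as 500 * 1024 bytes.
MAX_ROBOTS_BYTES = 500 * 1024


def check_robots(robots_txt: str, test_urls, user_agent: str = "Googlebot"):
    """Return (size_ok, {url: allowed}) for a robots.txt text and a list of URLs."""
    size_ok = len(robots_txt.encode("utf-8")) <= MAX_ROBOTS_BYTES
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = {url: parser.can_fetch(user_agent, url) for url in test_urls}
    return size_ok, allowed


# Hypothetical rules: keep Googlebot out of /private/.
robots = "User-agent: Googlebot\nDisallow: /private/\n"
print(check_robots(robots, ["https://www.example.com/", "https://www.example.com/private/page"]))
```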

14. Introducing the new Search Console (2018)

Ans. Google is now releasing this beta version to all Search Console users so that everyone can explore this simplified process of optimizing a website's presence on Google Search. The functionality will include the Search performance, Index Coverage, AMP status, and Job posting reports.

In the Status section, click Performance; under Search type, you can filter and compare Web, Image, and Video results.

Click Index Coverage, then choose All known pages or All submitted pages to see which pages are Valid and which are Excluded.

1. The Benefits of Google Webmaster Tools (Google Search Console)

Website owners and developers all over the world already use Google Webmaster tools to diagnose, monitor, and optimize their site. And those who aren’t using this free feature to their advantage should start immediately. The awareness you get from monitoring keywords, links, alerts, and manual actions is priceless.

Here are some important uses for Google Webmaster Tools that you should be aware of:

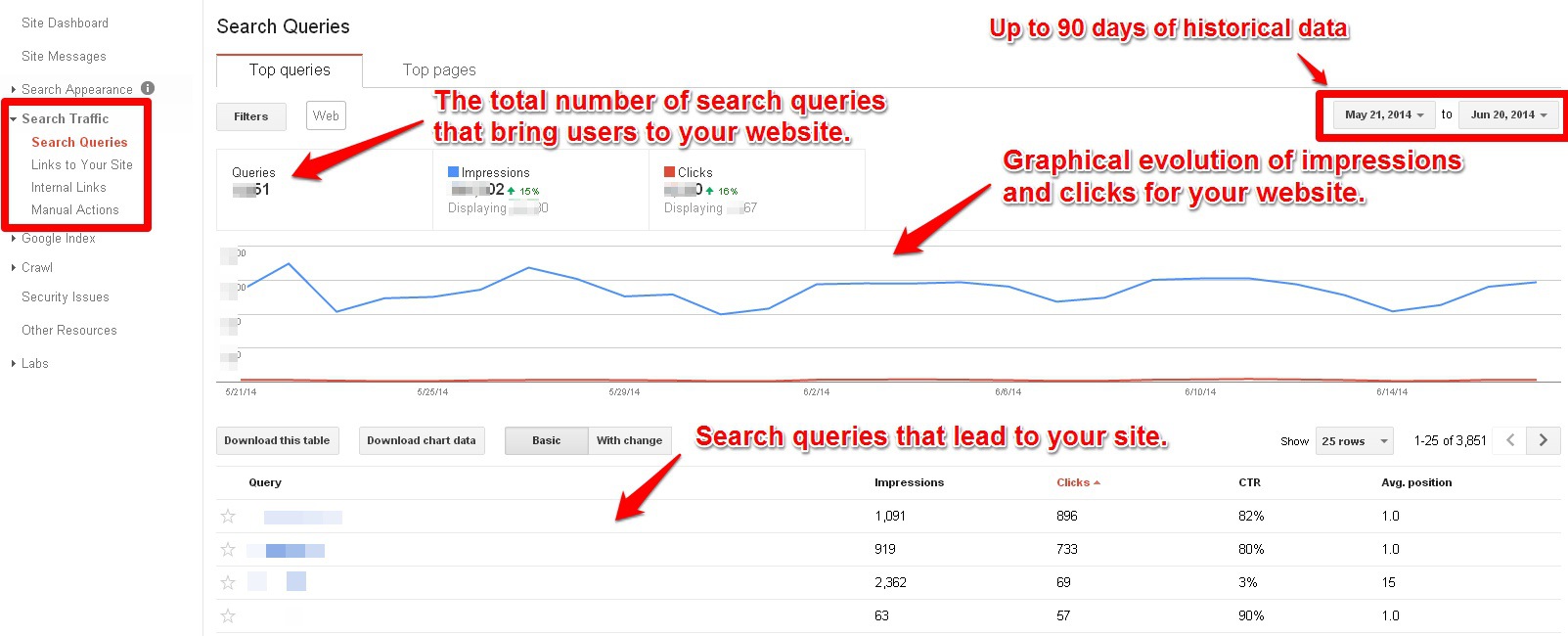

1. Search Queries:

The Search Queries section shows the keywords that led users to your site.

2. Monitor Your Site’s Link Profile:

The second very useful section of Google Webmaster Tools is the Links to Your Site section.

3. Get E-mail Notifications About Your Site’s Issues/Penalties:

GWT alerts you as soon as your site has been penalized by Google or if there are any other problems with your site.

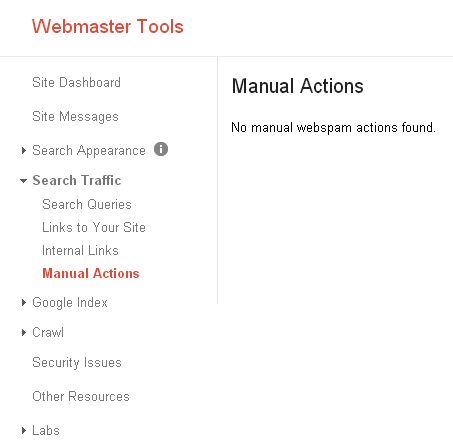

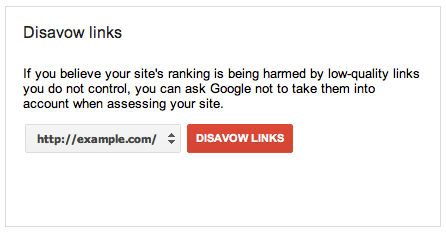

4. Recover Your Site Using The Disavow Tool:

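If your site raises a lot of red flags with Google, it will be reviewed by a human and penalized until the guideline violations are resolved. If the penalty is caused by unnatural links pointing to your site, the Disavow Tool lets you ask Google to ignore those links.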

5. Identify Broken Pages on Your Site:

Looking at the Crawl Errors feature from Webmaster Tools from time to time can save you from disappointing many visitors.

You’ll have data about site and URL errors that Googlebot found while crawling your website, so you can fix the problems and improve the user experience.

6. Links:

The Links section shows where your links (internal and external) come from, as well as the pages they point to.

7. Crawl Errors:

The Crawl Errors section shows the errors Googlebot encountered on your site. The data is similar to what you get from the Spider Simulator: inaccessible pages, missing pages, server errors, and all sorts of other problems that prevented Google from crawling your site correctly.

8. Sitemaps:

The last section you can access from the Dashboard is the Sitemaps section. Here you see the sitemaps of your site that Google has found and the number of URLs in them. By submitting a sitemap, you tell Google that the pages listed are eligible to be crawled, which ultimately helps them get indexed. With this resource, you can see which sitemaps have been submitted and, in the graph, the number of submitted and indexed pages.

9. Robots.txt:

The robots.txt file tells Google which parts of your site you do not want it to crawl. This tool lets you check whether your robots.txt file is implemented correctly or has errors.